Leave-One-Out Cross-Validation: Which Model Performs Best?

This cross-validation (CV) technique trains on all samples except one. Each sample is used once as a singleton test set, while the remaining samples form the training set.
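The split described here can be sketched in a few lines of plain Python. `leave_one_out_splits` is a hypothetical helper name used only for illustration, not a library function (scikit-learn's `LeaveOneOut` produces the same indices).

```python
from typing import Iterator, List, Tuple


def leave_one_out_splits(n: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) pairs, one per sample.

    Sample i forms a singleton test set; all other samples are training data.
    """
    for i in range(n):
        train = [j for j in range(n) if j != i]
        yield train, [i]


for train, test in leave_one_out_splits(4):
    print("train:", train, "test:", test)
```

With n samples this yields exactly n splits, and every index appears in a test set exactly once.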

A Quick Intro to Leave-One-Out Cross-Validation (LOOCV)

Bayesian Leave-One-Out Cross-Validation Approximations for Gaussian Latent Variable Models. Aki Vehtari (aki.vehtari@aalto.fi), Tommi Mononen, Ville Tolvanen, Tuomas Sivula: Helsinki Institute of Information Technology HIIT, Department of Computer Science, Aalto University, P.O. Box 15400, 00076 Aalto, Finland. Ole Winther (owi@imm.dtu.dk), Technical…

Our paper: Beleites C. The commonly used types are illustrated above. Leave-one-out cross-validation (LOOCV) is an exhaustive cross-validation technique.

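```python
from sklearn.metrics import r2_score
from sklearn.model_selection import LeaveOneOut, cross_val_predict, cross_val_score

# best_clf, features_important and y_train are assumed to be defined earlier.
my_cv = LeaveOneOut()

# Per-fold R^2 is undefined for a single test sample (scikit-learn returns nan),
# so score each fold with squared error instead...
cvs = cross_val_score(best_clf, features_important, y_train,
                      scoring="neg_mean_squared_error", cv=my_cv)
print(-cvs.mean())  # LOOCV estimate of the test MSE

# ...or pool the n out-of-sample predictions and compute a single R^2 over them.
y_pred = cross_val_predict(best_clf, features_important, y_train, cv=my_cv)
print(r2_score(y_train, y_pred))
```

This prints the LOOCV estimate of the test MSE and a cross-validated R² computed over the pooled leave-one-out predictions. Passing `scoring="r2"` directly does not work with LOOCV: each test fold holds a single sample, for which R² is undefined, so scikit-learn returns `nan` for every fold and the mean is `nan`.

However, with cross-validation one computes a statistic on the left-out sample(s), while with jackknifing one computes a statistic from the kept samples only.

Reference: Assessing and improving the stability of chemometric models in small sample size situations. Anal. Bioanal. Chem. 2008, 390, 1261-1271.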
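The cross-validation versus jackknife distinction can be made concrete with a small sketch that uses the sample mean as both the "model" and the statistic. The toy data values are assumptions for illustration. LOOCV scores each left-out point against a mean computed without it; the jackknife recomputes the statistic on the kept samples and combines those replicates, here into the standard jackknife standard-error estimate.

```python
import math
from statistics import mean

data = [2.0, 4.0, 4.0, 5.0, 7.0]  # illustrative toy values
n = len(data)

# LOOCV: fit on the kept samples, then score the prediction on the LEFT-OUT sample.
loo_sq_errors = []
for i in range(n):
    kept = data[:i] + data[i + 1:]
    prediction = mean(kept)  # "model" = mean of the training data
    loo_sq_errors.append((data[i] - prediction) ** 2)
loocv_mse = mean(loo_sq_errors)

# Jackknife: compute the statistic from the KEPT samples only, then combine the replicates.
replicates = [mean(data[:i] + data[i + 1:]) for i in range(n)]
rep_mean = mean(replicates)
jackknife_se = math.sqrt((n - 1) / n * sum((r - rep_mean) ** 2 for r in replicates))

print(loocv_mse, jackknife_se)
```

Both loops leave out one observation at a time; what differs is where the quantity of interest is evaluated.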

In machine learning and data mining, k-fold cross-validation is a form of cross-validation in which the training data are divided into k approximately equal subsets; each of the k subsets is used as test data in turn, and the remaining k-1 subsets are used as training data. Leave-one-out cross-validation is the special case in which k equals the number of observations.

In R, `cv.glm()` (from the boot package) does this: each time, leave-one-out cross-validation (LOOCV) leaves out one observation, produces a fit on all the other data, and then makes a prediction at the x value of the observation that was left out.

Leave-one-out cross-validation (LOO) and the widely applicable information criterion (WAIC) are methods for estimating pointwise out-of-sample prediction accuracy from a fitted Bayesian model using the log-likelihood evaluated at the posterior simulations of the parameter values.
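A minimal sketch of the k-fold procedure, assuming contiguous (unshuffled) folds whose sizes differ by at most one. `k_fold_splits` is a hypothetical helper name; with k equal to the number of samples it reduces to leave-one-out.

```python
from typing import List, Tuple


def k_fold_splits(n: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Split indices 0..n-1 into k contiguous folds of nearly equal size.

    Each fold is used once as the test set; the other k-1 folds form the training set.
    """
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    # The first n % k folds get one extra sample so sizes differ by at most one.
    sizes = [n // k + (1 if f < n % k else 0) for f in range(k)]
    splits, start = [], 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        splits.append((train, test))
        start += size
    return splits


print(k_fold_splits(7, 3))  # three folds of sizes 3, 2, 2
print(k_fold_splits(4, 4))  # k = n: every test fold is a single sample (LOOCV)
```

In practice the indices are often shuffled before folding; that is omitted here to keep the splits easy to read.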

LOOCV is k-fold CV with k = N, where N is the number of samples in the dataset. We can then compare the calculated measures to see which model and predictor performs best in terms of cross-validation. A related variant is stratified k-fold cross-validation.

The parameter optimisation is performed automatically on 9 of the 10 image pairs, and then the performance of… LOOCV (leave-one-out cross-validation): in this method we train on the whole dataset except for a single data point, which is held out, and then iterate so that every data point is held out once. It provides a much less biased estimate of the test MSE than using a single test set, because we repeatedly fit a model to a dataset that contains n-1 observations.
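In symbols, using common textbook notation introduced here rather than taken from the source: if $\hat{y}_{(-i)}$ is the prediction for observation $i$ from a model fitted to the other $n-1$ observations, the LOOCV estimate of the test MSE averages the $n$ single-observation errors:

```latex
\mathrm{MSE}_i = \bigl(y_i - \hat{y}_{(-i)}\bigr)^2,
\qquad
\mathrm{CV}_{(n)} = \frac{1}{n} \sum_{i=1}^{n} \mathrm{MSE}_i .
```

Each $\mathrm{MSE}_i$ rests on a single observation and is highly variable on its own; averaging all $n$ of them gives the overall estimate.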

It requires one model to be created and evaluated for each example in the training dataset. We train the model without the held-out validation example and then test whether it correctly classifies that observation. An advantage of this method is that it makes use of all data points, so its estimate has low bias.
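The one-model-per-example procedure for a classifier can be sketched as follows. The one-nearest-neighbour classifier and the toy points are assumptions chosen for illustration, because "training" it only means storing the n-1 retained examples; `nearest_neighbour_predict` and `loocv_accuracy` are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def nearest_neighbour_predict(train: Sequence[Tuple[Point, str]], x: Point) -> str:
    """Predict the label of x as the label of its closest training point."""
    _, label = min(train, key=lambda pair: math.dist(pair[0], x))
    return label


def loocv_accuracy(data: List[Tuple[Point, str]]) -> float:
    """Fraction of examples classified correctly when each one is held out in turn."""
    correct = 0
    for i, (x, y) in enumerate(data):
        train = data[:i] + data[i + 1:]  # one "model" per held-out example
        if nearest_neighbour_predict(train, x) == y:
            correct += 1  # the held-out example was classified correctly
    return correct / len(data)


toy = [((0.0, 0.0), "a"), ((0.2, 0.1), "a"), ((1.0, 1.0), "b"),
       ((1.1, 0.9), "b"), ((0.6, 0.5), "b")]
print(loocv_accuracy(toy))
```

On this toy set the point at (0.6, 0.5) is closest to an "a" example once it is held out, so it is the only one misclassified.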

Leave-one-out cross-validation (LOO-CV), a model-independent evaluation method, cannot always select the best of several models when the sample size is small. Other suggested solutions to the validation question include the use of statistics that may require special consideration of censored observations [24]. LOOCV has both advantages and disadvantages.

Leave-one-out cross-validation (LOOCV) is a particular case of leave-p-out cross-validation with p = 1. The process looks similar to the jackknife. In LOO, one observation is taken out of the training set and used as the validation set. The method is the same as leave-p-out cross-validation except that, instead of p observations, only one is taken out of the training set.
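Leave-p-out can be sketched with `itertools.combinations`: every subset of p observations serves once as the test set, giving C(n, p) splits, and with p = 1 this yields exactly the n leave-one-out splits. `leave_p_out_splits` is a hypothetical helper name.

```python
from itertools import combinations
from math import comb
from typing import Iterator, List, Tuple


def leave_p_out_splits(n: int, p: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists for every size-p test subset of range(n)."""
    for test in combinations(range(n), p):
        held_out = set(test)
        train = [i for i in range(n) if i not in held_out]
        yield train, list(test)


n = 5
print(len(list(leave_p_out_splits(n, 2))), comb(n, 2))  # 10 10
print(len(list(leave_p_out_splits(n, 1))))              # 5: one split per observation
```

Because the number of splits grows combinatorially with p, leave-p-out quickly becomes impractical for p > 1.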

Repeated cross-validation of a variety that leaves out more than one case at a time (per fold), as well as many other resampling validation schemes, allows model instability to be assessed directly. This process repeats for each data point. I have a training set Xtrain consisting of two years of data and a test set Xtest consisting of one year of data.

We modify the LOO-CV method by moving a validation point around random normal distributions rather than leaving it out, naming it move-one-away cross-validation (MOA-CV), which is a… The leave-one-out cross-validation (LOOCV) approach has the advantages of producing model estimates with less bias and of being easier to apply in smaller samples [5]. In LOOCV, the model is fitted and then used to predict the single observation that forms the validation set.

Leave-one-out cross-validation in R. Compared with using a single test set, it tends not to overestimate the test MSE. Let's look at its pros.

Leave-one-out cross-validation offers the following pros. It is a special case of LpOCV with p = 1. LOOCV is an extreme version of k-fold cross-validation that has the maximum computational cost.
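For ordinary least-squares regression the n refits can be avoided: a standard identity gives each leave-one-out residual as e_i / (1 - h_i), where e_i is the ordinary residual from the full fit and h_i is the i-th diagonal entry (leverage) of the hat matrix. The sketch below, on randomly generated data that is purely an assumption for illustration, checks this shortcut against brute-force LOOCV. The identity holds for least-squares fits; it does not apply to models in general.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 30
X = np.column_stack([np.ones(n), rng.normal(size=n)])  # intercept + one predictor
y = 1.5 + 2.0 * X[:, 1] + rng.normal(scale=0.5, size=n)


def brute_force_loocv_mse(X: np.ndarray, y: np.ndarray) -> float:
    """Refit least squares n times, each time predicting the held-out row."""
    errors = []
    for i in range(len(y)):
        mask = np.arange(len(y)) != i
        beta, *_ = np.linalg.lstsq(X[mask], y[mask], rcond=None)
        errors.append((y[i] - X[i] @ beta) ** 2)
    return float(np.mean(errors))


def shortcut_loocv_mse(X: np.ndarray, y: np.ndarray) -> float:
    """Single fit: leave-one-out residual = ordinary residual / (1 - leverage)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    leverage = np.diag(X @ np.linalg.pinv(X.T @ X) @ X.T)  # diagonal of the hat matrix
    return float(np.mean((residuals / (1 - leverage)) ** 2))


print(brute_force_loocv_mse(X, y), shortcut_loocv_mse(X, y))
```

The brute-force version performs n fits, while the shortcut performs one, which is why the identity is attractive when n is large.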

k is often 10 or 5. This is a variant of LPO. One commonly used method for doing this is known as leave-one-out cross-validation (LOOCV), which uses the following approach:

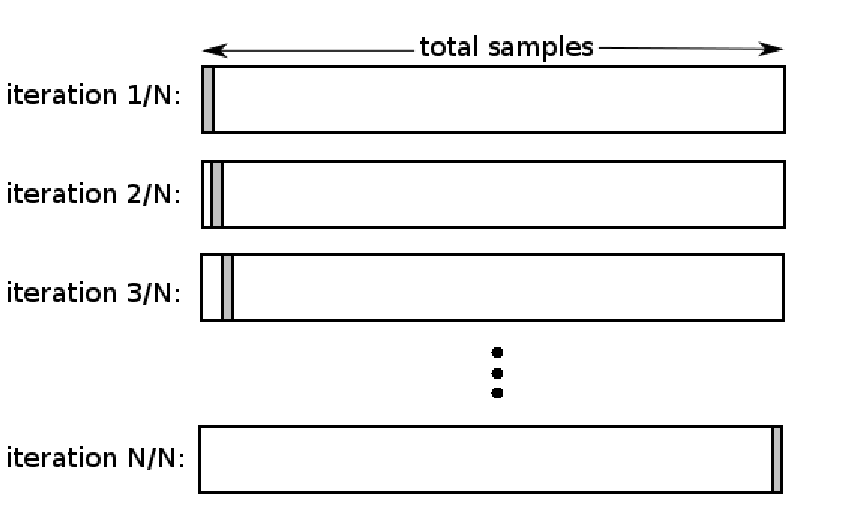

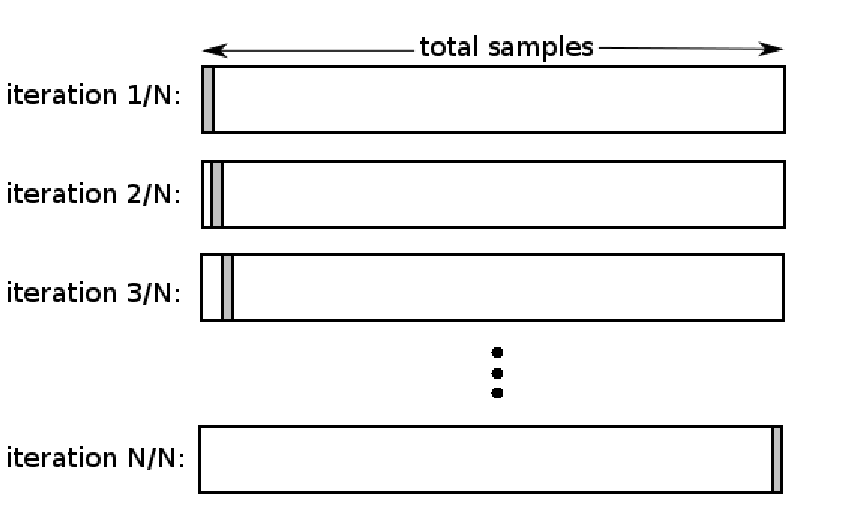

Leave-one-out cross-validation, or LOOCV, is a configuration of k-fold cross-validation where k is set to the number of examples in the dataset. LOOCV is a cross-validation approach in which each observation in turn is used as the validation set and the remaining N-1 observations are used as the training set. Split a dataset into a training set and a testing set, using all but one observation as part of the training set.

Leave-one-out cross-validation works as follows. I would like to create a rolling leave-one-out to… From the table below, we see that it is in fact the square-root-transformed model that performs best in terms of cross-validation, with the median predictor obtaining the lowest LOOCV measure.
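A comparison of this kind can be sketched as follows: each candidate predictor goes through the same LOOCV loop, and the one with the lowest LOOCV measure is preferred. The candidates (mean versus median of the training responses), the absolute-error measure and the toy data are assumptions for illustration; they are not the models from the comparison described above.

```python
from statistics import mean, median
from typing import Callable, Dict, List, Sequence


def loocv_mae(y: Sequence[float], predictor: Callable[[List[float]], float]) -> float:
    """Mean absolute error of predicting each left-out value from the rest."""
    errors = []
    for i in range(len(y)):
        train = list(y[:i]) + list(y[i + 1:])
        errors.append(abs(y[i] - predictor(train)))
    return mean(errors)


y = [3.1, 2.9, 3.4, 3.0, 3.2, 9.8]  # toy data with one outlying value
candidates: Dict[str, Callable[[List[float]], float]] = {"mean": mean, "median": median}
scores = {name: loocv_mae(y, f) for name, f in candidates.items()}
best = min(scores, key=scores.get)
print(scores, "best:", best)
```

On this toy data the outlier pulls the mean-based predictions upward, so the median predictor ends up with the lower LOOCV error.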

This is a very old technique that has largely been replaced by k-fold and stratified k-fold cross-validation. scikit-learn's `LeaveOneOut` provides train/test indices to split data into train/test sets. When p = 1 in leave-p-out cross-validation, it is leave-one-out cross-validation.
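A short sketch, assuming scikit-learn is installed, shows the indices `LeaveOneOut` produces and checks that `LeavePOut(p=1)` and an unshuffled `KFold(n_splits=n)` yield the same splits. The small feature matrix is arbitrary placeholder data.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut, LeavePOut

X = np.arange(8).reshape(4, 2)  # 4 samples, 2 features (values are arbitrary)

loo_splits = [(tr.tolist(), te.tolist()) for tr, te in LeaveOneOut().split(X)]
for train, test in loo_splits:
    print("train:", train, "test:", test)

# Leave-p-out with p = 1, and k-fold with k = n, give the same train/test indices.
lpo_splits = [(tr.tolist(), te.tolist()) for tr, te in LeavePOut(p=1).split(X)]
kf_splits = [(tr.tolist(), te.tolist()) for tr, te in KFold(n_splits=len(X)).split(X)]
print(loo_splits == lpo_splits == kf_splits)  # True
```

Any of these splitters can be passed as the `cv` argument of `cross_val_score` or `cross_val_predict`.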

In this approach, for each learning set only one data point is reserved, and the remaining data are used to train the model. Leave-one-out cross-validation. Build a model using only data from the training set.

LOOCV operations: for a dataset with n rows, the 1st row is selected for validation and the remaining n-1 rows are used to train the model; then the 2nd row is held out, and so on. Leave-one-out cross-validation fits the model n times if there are n observations.
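Putting the steps together, here is a minimal LOOCV loop for a simple linear regression with one predictor, fitted by least squares in closed form. The model choice and the `fit_line`/`loocv_mse` names are assumptions for illustration; any model with a fit and predict step could be substituted. The loop fits the model n times, once per held-out row, and averages the n squared errors.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Least-squares intercept and slope for y = a + b*x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def loocv_mse(x: List[float], y: List[float]) -> float:
    squared_errors = []
    for i in range(len(x)):
        # Step 1: hold out row i; the remaining n-1 rows form the training set.
        x_train, y_train = x[:i] + x[i + 1:], y[:i] + y[i + 1:]
        # Step 2: build the model using only the training set.
        intercept, slope = fit_line(x_train, y_train)
        # Step 3: predict the held-out row and record its squared error.
        squared_errors.append((y[i] - (intercept + slope * x[i])) ** 2)
    # Steps 4-5: after n repetitions, average the n errors to estimate the test MSE.
    return mean(squared_errors)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
print(loocv_mse(x, [2.0 * xi + 1.0 for xi in x]))  # perfectly linear data: 0.0
```

For noisy data the result is positive; for example, with x = [1, 2, 3] and y = [1, 2, 4] each fit passes through the two retained points and the LOOCV MSE is 0.75.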
